Git is the source code version control system that is rapidly becoming the standard for open source projects. It has a powerful distributed model which allows advanced users to do tricky things with branches and rewriting history. What a pity that it’s so hard to learn, has such an unpleasant command line interface, and treats its users with such utter contempt.

1. Complex information model

The information model is complicated – and you need to know all of it. As a point of reference, consider Subversion: you have files, a working directory, a repository, versions, branches, and tags. That’s pretty much everything you need to know. In fact, branches are tags, and files you already know about, so you really need to learn three new things. Versions are linear, with the odd merge. Now Git: you have files, a working tree, an index, a local repository, a remote repository, remotes (pointers to remote repositories), commits, treeishes (pointers to commits), branches, a stash… and you need to know all of it.
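To make the working tree / index / local repository part of that list concrete, here is a minimal sketch that walks one edit through all three. The temp directory, file name and dummy identity are invented for the demo. `git diff --quiet` compares the working tree with the index, and `git diff --cached --quiet` compares the index with the last commit; both exit non-zero when they find a difference.

```shell
#!/bin/sh
# Sketch: one edit passing through working tree -> index -> local repository.
# Throwaway repo and dummy identity are assumptions for the demo.
set -e
export GIT_AUTHOR_NAME=demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=demo GIT_COMMITTER_EMAIL=demo@example.com

work=$(mktemp -d)
git init -q "$work/repo"
cd "$work/repo"
echo v1 > file.txt
git add file.txt
git commit -q -m "v1"

# 1. Edit the file: the working tree now differs from the index.
echo v2 > file.txt
git diff --quiet && edited_unstaged=no || edited_unstaged=yes

# 2. Stage it: the index now differs from the last commit,
#    and the working tree matches the index again.
git add file.txt
git diff --quiet && unstaged_after_add=no || unstaged_after_add=yes
git diff --cached --quiet && staged=no || staged=yes

# 3. Commit: the local repository catches up with the index.
git commit -q -m "v2"
echo "unstaged edit: $edited_unstaged; unstaged after add: $unstaged_after_add; staged: $staged"
```

Pushing to a remote repository would add a fourth place the same change has to travel.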

2. Crazy command line syntax

The command line syntax is completely arbitrary and inconsistent. Some “shortcuts” are graced with top level commands: “git pull” is exactly equivalent to “git fetch” followed by “git merge”. But the shortcut for “git branch” combined with “git checkout”? “git checkout -b”. Specifying filenames completely changes the semantics of some commands (“git commit” ignores local, unstaged changes in foo.txt; “git commit foo.txt” doesn’t). The various options of “git reset” do completely different things.

The most spectacular example of this is the command “git am”, which as far as I can tell, is something Linus hacked up and forced into the main codebase to solve a problem he was having one night. It combines email reading with patch applying, and thus uses a different patch syntax (specifically, one with email headers at the top).
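Here is a sketch of the point 2 shortcuts and the path-dependent `git commit` behaviour, spelled out long-hand in throwaway repositories. It assumes default settings (in particular that `git pull` merges rather than rebases); the branch names feature-a and feature-b, the files and the dummy identity are hypothetical.

```shell
#!/bin/sh
# Sketch: "git pull" and "git checkout -b" written out long-hand, plus
# "git commit" vs "git commit <path>". Repos, names and identity are hypothetical.
set -e
export GIT_AUTHOR_NAME=demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=demo GIT_COMMITTER_EMAIL=demo@example.com

work=$(mktemp -d)
git init -q "$work/upstream"
cd "$work/upstream"
echo one > file.txt
git add file.txt
git commit -q -m "first"
git clone -q "$work/upstream" "$work/clone"

# A new commit lands upstream after the clone was made.
echo two >> file.txt
git commit -q -am "second"

cd "$work/clone"
# "git pull" (default settings): fetch, then merge the upstream branch.
git fetch -q origin
git merge -q "@{upstream}"

# "git checkout -b" is "git branch" followed by "git checkout".
git branch feature-a
git checkout -q feature-a
git checkout -q -b feature-b

# "git commit" with no paths commits only what is staged...
echo staged > foo.txt
git add foo.txt
git commit -q -m "add foo"
echo unstaged >> foo.txt
git commit -q -m "nothing staged" || echo "no paths given: unstaged edit ignored"
# ...while naming the file commits its working-tree contents, staged or not.
git commit -q -m "with path" foo.txt
```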

3. Crappy documentation

The man pages are one almighty “fuck you”. They describe the commands from the perspective of a computer scientist, not a user. Case in point:

git-push – Update remote refs along with associated objects

Here’s a description for humans: git-push – Upload changes from your local repository into a remote repository

Update, another example: (thanks cgd)

git-rebase – Forward-port local commits to the updated upstream head

Translation: git-rebase – Sequentially regenerate a series of commits so they can be applied directly to the head node

4. Information model sprawl

Remember the complicated information model in step 1? It keeps growing, like a cancer. Keep using Git, and more concepts will occasionally drop out of the sky: refs, tags, the reflog, fast-forward commits, detached head state (!), remote branches, tracking, namespaces.

5. Leaky abstraction

Git doesn’t so much have a leaky abstraction as no abstraction. There is essentially no distinction between implementation detail and user interface. It’s understandable that an advanced user might need to know a little about how features are implemented, to grasp subtleties about various commands. But even beginners are quickly confronted with hideous internal details. In theory, there is the “plumbing” and “the porcelain” – but you’d better be a plumber to know how to work the porcelain.

A common response I get to complaints about Git’s command line complexity is that “you don’t need to use all those commands, you can use it like Subversion if that’s what you really want”. Rubbish. That’s like telling an old granny that the freeway isn’t scary, she can drive at 20kph in the left lane if she wants. Git doesn’t provide any useful subsets – every command soon requires another; even simple actions often require complex actions to undo or refine.

Here was the (well-intentioned!) advice from a GitHub maintainer of a project I’m working on (with apologies!):

- Find the merge base between your branch and master: ‘git merge-base master yourbranch’

- Assuming you’ve already committed your changes, rebase your commit onto the merge base, then create a new branch:

  - git rebase --onto <basecommit> HEAD~1 HEAD

  - git checkout -b my-new-branch

- Check out your ruggedisation branch, and remove the commit you just rebased: ‘git reset --hard HEAD~1’

- Merge your new branch back into ruggedisation: ‘git merge my-new-branch’

- Check out master (‘git checkout master’), merge your new branch in (‘git merge my-new-branch’), and check it works when merged, then remove the merge (‘git reset --hard HEAD~1’).

- Push your new branch (‘git push origin my-new-branch’) and log a pull request.

Translation: “It’s easy, Granny. Just rev to 6000, dump the clutch, and use wheel spin to get round the first corner. Up to third, then trail brake onto the freeway, late apexing but watch the marbles on the inside. Hard up to fifth, then handbrake turn to make the exit.”
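For the curious, here is a minimal sketch that replays the maintainer's advice in a throwaway local repository, so you can watch what each step does. The branch names come from the advice; the commits, file names and dummy identity are hypothetical, and the final push and pull request are left out because there is no remote.

```shell
#!/bin/sh
# Sketch of the advice above in a throwaway repo. Commits, files and identity
# are hypothetical; the push/pull-request step is omitted (no remote).
set -e
export GIT_AUTHOR_NAME=demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=demo GIT_COMMITTER_EMAIL=demo@example.com

work=$(mktemp -d)
git init -q "$work/repo"
cd "$work/repo"
git symbolic-ref HEAD refs/heads/master
echo base > base.txt
git add base.txt
git commit -q -m "baseline"

# Setup: a ruggedisation branch whose last commit should really be its own branch.
git checkout -q -b ruggedisation
echo rugged > rugged.txt
git add rugged.txt
git commit -q -m "ruggedisation work"
echo fix > fix.txt
git add fix.txt
git commit -q -m "standalone fix"

# 1. Find the merge base between the branch and master.
base=$(git merge-base master ruggedisation)

# 2. Replay only the last commit onto the merge base. Passing "HEAD" as the
#    branch argument leaves a detached HEAD, so ruggedisation is untouched.
git rebase -q --onto "$base" HEAD~1 HEAD
git checkout -q -b my-new-branch

# 3. Remove that commit from ruggedisation...
git checkout -q ruggedisation
git reset -q --hard HEAD~1

# 4. ...and merge the new branch back in.
git merge -q --no-edit my-new-branch

# 5. Trial-merge into master, check the result, then undo the merge.
git checkout -q master
git merge -q --no-edit my-new-branch
test -f fix.txt
git reset -q --hard HEAD~1
```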

6. Power for the maintainer, at the expense of the contributor

Most of the power of Git is aimed squarely at maintainers of codebases: people who have to merge contributions from a wide number of different sources, or who have to ensure a number of parallel development efforts result in a single, coherent, stable release. This is good. But the majority of Git users are not in this situation: they simply write code, often on a single branch for months at a time. Git is a four-handle, dual-boiler espresso machine – when all they need is instant.

Interestingly, I don’t think this trade-off is inherent in Git’s design. It’s simply the result of ignoring the needs of normal users, and confusing architecture with interface. “Git is good” is true if speaking of architecture – but false of user interface. Someone could quite conceivably write an improved interface (easygit is a start) that hides unhelpful complexity such as the index and the local repository.

7. Unsafe version control

The fundamental promise of any version control system is this: “Once you put your precious source code in here, it’s safe. You can make any changes you like, and you can always get it back”. Git breaks this promise. Several ways a committer can irrevocably destroy the contents of a repository:

- git add . / … / git push -f origin master

- git push origin +master

- git rebase -i <some commit that has already been pushed and worked from> / git push
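Here is a minimal sketch of the force-push scenario, run entirely on local disk: a bare repository stands in for the shared remote, and "alice" and "bob" are hypothetical clones with a dummy identity. A plain `git reset --hard HEAD~1` stands in for whatever history rewriting came before the force push. It also shows the escape hatch: any clone that still has the commit can put it back.

```shell
#!/bin/sh
# Sketch of point 7: a force push drops a commit from the shared branch, and a
# clone that still has it restores it. All names and the identity are hypothetical.
set -e
export GIT_AUTHOR_NAME=demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=demo GIT_COMMITTER_EMAIL=demo@example.com

work=$(mktemp -d)
git init -q --bare "$work/shared.git"
git -C "$work/shared.git" symbolic-ref HEAD refs/heads/master

git init -q "$work/alice"
cd "$work/alice"
git symbolic-ref HEAD refs/heads/master
git remote add origin "$work/shared.git"
echo base > file.txt
git add file.txt
git commit -q -m "base"
echo important > file.txt
git commit -q -am "important work"
git push -q origin master
important=$(git rev-parse HEAD)

# Bob clones while "important work" is still on the shared branch.
git clone -q "$work/shared.git" "$work/bob"

# Alice rewrites her local history and force-pushes it over the shared branch.
git reset -q --hard HEAD~1
git push -q -f origin master
after_force=$(git -C "$work/shared.git" rev-parse master)

# The commit is gone from the remote branch, but Bob's clone still has it,
# so an ordinary (fast-forward) push from there restores it.
cd "$work/bob"
git push -q origin master
```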

8. Burden of VCS maintenance pushed to contributors

In the traditional open source project, only one person had to deal with the complexities of branches and merges: the maintainer. Everyone else only had to update, commit, update, commit, update, commit… Git dumps the burden of understanding complex version control on everyone – while making the maintainer’s job easier. Why would you do this to new contributors – those with nothing invested in the project, and every incentive to throw their hands up and leave?

9. Git history is a bunch of lies

The primary output of development work should be source code. Is a well-maintained history really such an important by-product? Most of the arguments for rebase, in particular, rely on aesthetic judgments about “messy merges” in the history, or “unreadable logs”. So rebase encourages you to lie in order to provide other developers with a “clean”, “uncluttered” history. Surely the correct solution is a better log output that can filter out these unwanted merges.

10. Simple tasks need so many commands

The point of working on an open source project is to make some changes, then share them with the world. In Subversion, this looks like:

- Make some changes

- svn commit

If your changes involve creating new files, there’s a tricky extra step:

- Make some changes

- svn add

- svn commit

For a Github-hosted project, the following is basically the bare minimum:

- Make some changes

- git add [not to be confused with svn add]

- git commit

- git push

- Your changes are still only halfway there. Now log in to Github, find your commit, and issue a “pull request” so that someone upstream can merge it.

In reality though, the maintainer of that Github-hosted project will probably prefer your changes to be on feature branches. They’ll ask you to work like this:

- git checkout master [to make sure each new feature starts from the baseline]

- git checkout -b newfeature

- Make some changes

- git add [not to be confused with svn add]

- git commit

- git push

- Now log in to Github, switch to your newfeature branch, and issue a “pull request” so that the maintainer can merge it.

So moving your changes from your local directory to the actual project repository takes: add, commit, push, “click pull request”, pull, merge, push. (I think.)
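The command-line half of the feature-branch workflow looks roughly like this as a script. This is a sketch under assumptions: a local bare repository stands in for your GitHub fork, and the file names and dummy identity are hypothetical.

```shell
#!/bin/sh
# Sketch of the feature-branch workflow, minus the web UI.
# A local bare repo stands in for the fork; names and identity are hypothetical.
set -e
export GIT_AUTHOR_NAME=demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=demo GIT_COMMITTER_EMAIL=demo@example.com

work=$(mktemp -d)
git init -q --bare "$work/fork.git"
git init -q "$work/project"
cd "$work/project"
git symbolic-ref HEAD refs/heads/master
git remote add origin "$work/fork.git"
echo baseline > README
git add README
git commit -q -m "baseline"
git push -q origin master

git checkout -q master              # each new feature starts from the baseline
git checkout -q -b newfeature
echo feature > feature.txt          # make some changes
git add feature.txt
git commit -q -m "Add new feature"
git push -q -u origin newfeature    # the branch the pull request is opened from
# Opening the pull request, and the maintainer's pull/merge/push, happen elsewhere.
```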

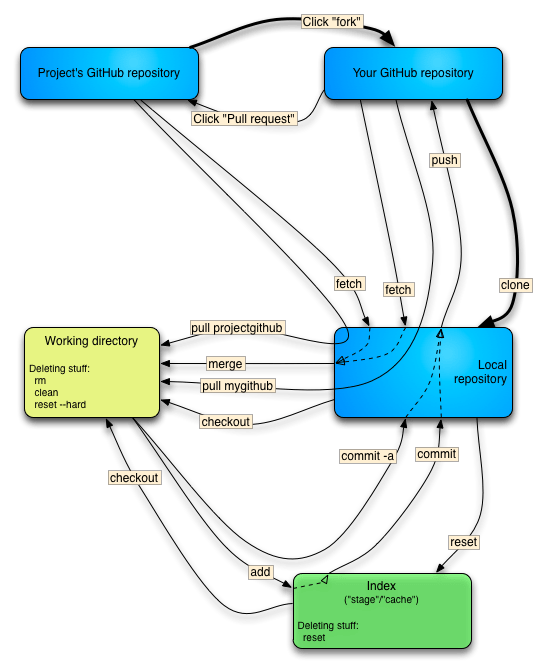

As an added bonus, here’s a diagram illustrating the commands a typical developer on a traditional Subversion project needed to know about to get their work done. This is the bread and butter of VCS: checking out a repository, committing changes, and getting updates.

“Bread and butter” commands and concepts needed to work with a remote Subversion repository.

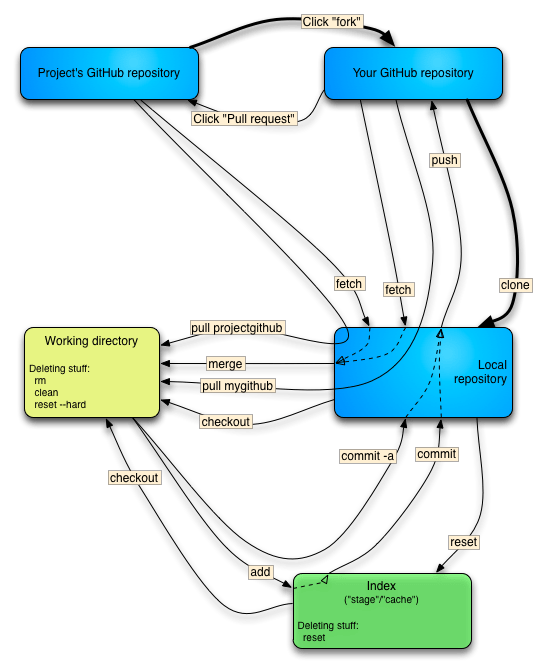

And now here’s what you need to deal with for a typical Github-hosted project:

The “bread and butter” commands and concepts needed to work with a Github-hosted project.

If the power of Git is sophisticated branching and merging, then its weakness is the complexity of simple tasks.

Update (August 3, 2012)

This post has obviously struck a nerve, and gets a lot of traffic. Thought I’d address some of the most frequent comments.

- The comparison between a Subversion repository with commit access and a Git repository without it isn’t fair. True. But that’s been my experience: most SVN repositories I’ve seen have many committers – it works better that way. Git (or at least Github) repositories tend not to: you’re expected to submit pull requests, even after you reach the “trusted” stage. Perhaps someone else would like to do a fairer apples-to-apples comparison.

- You’re just used to SVN. There’s some truth to this, even though I haven’t done a huge amount of coding in SVN-based projects. Git’s commands and information model are still inherently difficult to learn, and the situation is not helped by using Subversion command names with different meanings (eg, “svn add” vs “git add”).

- But my life is so much better with Git, why are you against it? I’m not – I actually quite like the architecture and what it lets you do. You can be against a UI without being against the product.

- But you only need a few basic commands to get by. That hasn’t been my experience at all. You can just barely survive for a while with clone, add, commit, and checkout. But very soon you need rebase, push, pull, fetch, merge, status, log, and the annoyingly-commandless “pull request”. And before long, cherry-pick, reflog, etc etc…

- Use Mercurial instead! Sure, if you’re the lucky person who gets to choose the VCS used by your project.

- Subversion has even worse problems! Probably. This post is about Git’s deficiencies. Subversion’s own crappiness is no excuse.

- As a programmer, it’s worth investing time learning your tools. True, but beside the point. The point is, the tool is hard to learn and should be improved.

- If you can’t understand it, you must be dumb. True to an extent, but see the previous point.

- There’s a flaw in point X. You’re right. As of writing, over 80,000 people have viewed this post. Probably over 1000 have commented on it, on Reddit (530 comments), on Hacker News (250 comments), here (100 comments). All the many flaws, inaccuracies, mischaracterisations, generalisations and biases have been brought to light. If I’d known it would be so popular, I would have tried harder. Overall, the level of debate has actually been pretty good, so thank you all.

A few bonus command inconsistencies:

Reset/checkout

To reset one file in your working directory to its committed state:

git checkout file.txt

To reset every file in your working directory to its committed state:

git reset --hard
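A small sketch of the two commands in action, in a throwaway repository with hypothetical file names and a dummy identity. The `--` in `git checkout -- file.txt` tells Git that file.txt is a path rather than a branch name, which matters if a branch with the same name ever exists.

```shell
#!/bin/sh
# Sketch: restoring one file vs. every file to its committed state.
# Throwaway repo; file names and identity are hypothetical.
set -e
export GIT_AUTHOR_NAME=demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=demo GIT_COMMITTER_EMAIL=demo@example.com

work=$(mktemp -d)
git init -q "$work/repo"
cd "$work/repo"
echo original > file.txt
echo original > other.txt
git add file.txt other.txt
git commit -q -m "init"
echo edited > file.txt
echo edited > other.txt

# One file: restore file.txt from the index (which matches the last commit).
git checkout -- file.txt
other_after_checkout=$(cat other.txt)   # other.txt is left alone

# Every tracked file (and the index) back to the last commit.
git reset -q --hard
```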

Remotes and branches

git checkout remotename/branchname

git pull remotename branchname

There’s another command where the separator is remotename:branchname, but I don’t recall right now.
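One colon form that does exist is the refspec accepted by `git push` and `git fetch`, which pairs a local branch with a remote branch (`localbranch:remotebranch`) rather than a remote with a branch; whether that is the command meant here is a guess. The sketch below puts the three separators side by side, using a local bare repository as the remote, a hypothetical branch name (published) and a dummy identity.

```shell
#!/bin/sh
# Sketch: three separators for "a branch on a remote".
# A local bare repo stands in for the remote; "published" is a hypothetical name.
set -e
export GIT_AUTHOR_NAME=demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=demo GIT_COMMITTER_EMAIL=demo@example.com

work=$(mktemp -d)
git init -q --bare "$work/remote.git"
git init -q "$work/repo"
cd "$work/repo"
git symbolic-ref HEAD refs/heads/master
git remote add origin "$work/remote.git"
echo hello > file.txt
git add file.txt
git commit -q -m "init"

# Space: remote name, then branch name.
git push -q origin master
# Colon (a refspec): <local branch>:<branch name on the remote>.
git push -q origin master:published
# Slash: the remote-tracking branch as seen locally after a fetch.
git fetch -q origin
git log -1 --format=%s origin/published
```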

Command options that are practically mandatory

And finally, a list of commands I’ve noticed which are almost useless without additional options.

| Base command | Useless functionality | Useful command | Useful functionality |
| --- | --- | --- | --- |
| git branch foo | Creates a branch but does nothing with it | git checkout -b foo | Creates branch and switches to it |
| git remote | Shows names of remotes | git remote -v | Shows names and URLs of remotes |
| git stash | Stores modifications to tracked files, then rolls them back | git stash -u | Also does the same to untracked files |
| git branch | Lists names of local branches | git branch -av | Lists local and remote-tracking branches; shows latest commit message |
| git rebase | Destroy history blindfolded | git rebase -i | Lets you rewrite the upstream history of a branch, choosing which commits to keep, squash, or ditch |
| git reset foo | Unstages files | git reset --hard / git reset --soft | Discards local modifications / Returns to another commit, but doesn’t touch working directory |
| git add | Nothing – prints warning | git add . / git add -A | Stages all local modifications/additions / Stages all local modifications/additions/deletions |

Update 2 (September 3, 2012)

A few interesting links:

#7 only irrevocably destroys data in the remote repo if 1) you have no direct way to interact with it (eg. GitHub), 2) nobody has cloned the data in question, and 3) you don’t have your local repo to recover it from.

As long as (3) doesn’t apply, you can run `git fsck --unreachable` to recover your lost commits.
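Here is a sketch of the local side of that recovery, in a throwaway repository with hypothetical names and a dummy identity. Note that by default `git fsck` counts reflog entries as references, so a commit you have only just reset away normally shows up as unreachable only with `--no-reflogs`; the reflog itself (`HEAD@{1}`) is usually the quicker route back.

```shell
#!/bin/sh
# Sketch: a commit dropped by "git reset --hard" is still recoverable locally.
# Throwaway repo; names and identity are hypothetical.
set -e
export GIT_AUTHOR_NAME=demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=demo GIT_COMMITTER_EMAIL=demo@example.com

work=$(mktemp -d)
git init -q "$work/repo"
cd "$work/repo"
echo v1 > file.txt
git add file.txt
git commit -q -m "v1"
echo v2 > file.txt
git commit -q -am "v2"
lost=$(git rev-parse HEAD)

git reset -q --hard HEAD~1      # "v2" is no longer on any branch

# The reflog still records where HEAD pointed before the reset.
git reflog -n 2
# fsck reports it only when reflog entries are not counted as references.
git fsck --unreachable --no-reflogs

# Move the branch back to where HEAD was before the reset.
git reset -q --hard "HEAD@{1}"
```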

Git is not meant to be user friendly or uncomplicated to beginners. Anybody who tells you otherwise is pulling your leg. Mercurial is meant to be (and still survives because of it), but it’s not quite as powerful as a result. Bazaar complicates things even more for reasons that escape me.

Subversion doesn’t even count, because anybody can write a simple VCS if they don’t have to make it distributed. (Just like anybody can build a simple cluster, so long as it doesn’t have to be fault-tolerant.)

Tim, you assume (as I think many Git users and developers do) that power and user-friendliness are somehow mutually incompatible. I don’t think Git is hard to use because it’s powerful. I think it’s hard to use because its developers never tried, and because they don’t value good user interfaces – including command lines. Git doesn’t say “sorry about the complexity, we’ve done everything we can to make it easy”, it says “Git’s hard, deal with it”.

Here’s an interesting example: “git stash”, until a recent version, was basically broken: it left untracked files behind, even though its man page stated that it left a “clean working directory”. They’ve now added a new option, “git stash -u”, which behaves the way git stash should always have worked. Now, making “git stash” perform incorrectly without the extra option is not “more powerful” – it’s just that they don’t value a sensible, intuitive user interface high enough to be willing to change the default behaviour from one version to the next.

I also think the mess that is Git’s interface arose because DVCS was basically a new concept and they didn’t know all the features it would need. If they’d known about rebase at the start, it would have been more deeply integrated, something like “push --linear-merge”.

(And your point about recoverability of “unrecoverable” Git commits is well made.)
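A minimal sketch of the difference being argued about here, in a throwaway repository with hypothetical file names and a dummy identity: plain `git stash` leaves the untracked file in place, while `git stash -u` (long form `--include-untracked`) stashes it too.

```shell
#!/bin/sh
# Sketch: what "git stash" leaves behind versus "git stash -u".
# Throwaway repo; file names and identity are hypothetical.
set -e
export GIT_AUTHOR_NAME=demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=demo GIT_COMMITTER_EMAIL=demo@example.com

work=$(mktemp -d)
git init -q "$work/repo"
cd "$work/repo"
echo tracked > tracked.txt
git add tracked.txt
git commit -q -m "init"

echo changed > tracked.txt   # modification to a tracked file
echo new > untracked.txt     # brand-new, untracked file

git stash -q                 # stashes the tracked change only
untracked_left=$( [ -e untracked.txt ] && echo yes || echo no )
git stash pop -q             # restore the tracked change

git stash -q -u              # both the change and untracked.txt are stashed
```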

Steve, I didn’t mean to imply that power and user-friendliness are mutually incompatible. I completely agree that Git really doesn’t even try.

I’ll come back to Mercurial (Hg) here to counterpoint – it’s the rival project, and it tries to be usable first, and powerful second. It does most of the things Git does, but tries to do it without hurting beginners. If the command is dangerous, you have to enable it first. A good example is “rebase”, because it destroys history (though by default there’s a local backup). The equivalent to the Git “clean” command, “hg purge” is likewise an “only if enabled” command.

There’s a trade-off though. There are things in Git, like “replace”, that will never be user-friendly because of what they do. In many cases Mercurial doesn’t even provide them, because a work-around exists and adding excessive numbers of commands works against their “user-friendly” aim.

In Hg, you can’t remove or hide remote commits. Period. You want to rebase and push those commits again? You’ll need remote login access to strip the previous commits first. (BitBucket now provides a user interface for doing this.) Git makes it very easy to do this, on the assumption that you “know what you’re doing”. Hg makes it hard to do stupid things (like blow away public history), because it assumes you probably don’t.

At my last job, I picked Hg for our developers for precisely this reason. I was very happy with it, and used to think all the Git enthusiasts were nuts. I used to loathe Git’s “shiny with sharp edges” nature. However, after using it extensively, I find it really is a better expert tool. I think the reason it’ll never become more user-friendly is because nobody who develops Git is interested in usability – there are other options for those that want them.

Oh, and “rebase” is a separate command in both Hg & Git for a very good reason – it always modifies history, unlike a merge (which doesn’t ever modify history).

I totally agree with you that they didn’t try on the user interface, and that they were still discovering some of the features they needed out of DVCS.

However, I think that the reason they didn’t focus on the UI was for the sake of the developers who would use the tool (and because they ate their own dog food). I would argue that the complex set of commands, and even some of the inconsistency, is for the sake of easy extension and integration. I think the set of commands is complex because they act almost as an API for applications to interface with Git, in a way similar to how many standard Unix utilities are useful for programming. The lack of abstraction leading to complexity is planned, I think, so that applications can have more control over how they interface. The inconsistencies are likely there so that they don’t break any existing applications which may depend on certain functionality (even if it isn’t to spec).

Basically, Git is not intended for those who don’t want to learn a new, complex tool. It’s intended for developers. Not planning for its widespread adoption may have been a fault of the project. However, I think the API-like interface also provides opportunities that would not be available had Git gone with a clean, abstracted interface.

“Basically, Git is not intended for those who don’t want to learn a new, complex tool. It’s intended for developers.”

I think your assumption that developers want to, or at least can be expected to, spend time learning developer tools is misguided. It was more reasonable 20 years ago. These days, we have so many tools and technologies to deal with, that they all must be made as learnable and intuitive as possible.

I don’t feel like your git stash example is entirely fair.

Nowhere in any of the git documentation does it say that it will operate on untracked files other than in the documentation for git add and commands which do an implicit add. git stash worked exactly the way it was described. Just like the vast majority of the git commands, it works on the working directory’s tracked files, not every file in the working directory regardless of whether it is tracked or not.

Honestly if git stash worked the way you describe (the -u option being the default) it wouldn’t work the way I wanted it to and I’d be much more hesitant to use it!

To say that git stash performed incorrectly is to push your own opinion on what the correct action of stash should be while ignoring the way that git operates.

If git stash -u was the default you probably still wouldn’t have liked it. You’d just cherry pick it as a completely different example. I could see you having written something like the following:

“Yet another example of the inconsistency in git is ‘git stash’. Where most of the commands in git operate on a set of tracked files in the working directory, git stash works on the working directory as a whole. This can cause confusion when you end up stashing and essentially removing hidden or meta-data files in your source code which weren’t meant to be tracked by version control as a side effect of temporarily stashing some changes.”

It feels a little bit like you’ve started with the conclusion “I hate git because it is inconsistent and the commands are needlessly confusing” and are searching for examples to prove it. Instead the better action would be trying to learn how git is used as git, why it works for the developers using it, and forming a conclusion afterwards.

That said, it does seem like you have in fact given git a try. I’m not sure the above characterization is entirely fair… but it is the impression I get from the cherry picked examples with no care given to how it fits into the workflow of a developer using git.

I disagree that Mercurial is less powerful than Git. Out of the box, it’s barely crippled due to the more advanced features being disabled by default; once enabled Mercurial is more powerful than Git, while still maintaining the ease-of-use of before.

Check out Mercurial Patch Queues. http://stevelosh.com/blog/2010/08/a-git-users-guide-to-mercurial-queues/

“Subversion doesn’t even count, because anybody can write a simple VCS if they don’t have to make it distributed.” You truly have no idea what you’re talking about. It was a wholly different world when Karl Fogel and company wrote SVN. You seem to have no concept of how difficult it was to create SVN in a world where there were few good tools, very little OSS, and a much more limited concept of VCS. That SVN succeeded so well at the task you dismiss out of hand is corroborated by its wide and enduring popularity.

basically you want git to bend to your will while thousands use it and don’t agree with you.

too bad :)

You put your comments in an excellent and well-balanced way as usual – a pleasure to read. I use and enjoy using git every day but it’s by no means my idea of an ideal tool & your comments rightly target both the inarguable shortcomings and the closed-minded defensiveness of the UNIX community.

I particularly have sympathy with your humorous summary “Git’s hard, deal with it.” It reminds me of a colleague who once remarked “Unix – you want friendly? Get a dog.”

For me, I have found SmartGit/Hg to be a wonderful utility that has opened up the power of git to me without screwing up my projects & it means I can basically forget about the command line for almost everything. I’ve tried many other tools in anger and, for what it is worth, I think SmartGit/Hg is the simplest and best – and gets significantly better every version.

I have no affiliation with Syntevo or their product. This is simply my personal recommendation. I don’t have much money to spend on software these days, being a dad of three kids means I am perpetually poor, but I shelled out for this product because it meant I could use it at work, at home on my hobbies and on my open source projects in Windows, OS X and Linux. Syntevo have genuinely impressed me.

Life is too short to spend it all learning the power of GIT. Do you learn every engine mechanism when you buy a car? The car solves your problem. To make a tool both easy and powerful, you build different interfaces and abstractions (which can be done within the same command line). To do that, empty your cup and keep your reasoning grounded in real-world scenarios.

I have used Mercurial; it is far easier and does the job better than GIT. I don’t know why there is so much hype over GIT. What will I do with its almighty power when it takes so long to learn?

If GIT does not simplify its workflow and commands, another variant will emerge out of frustration, because the community failed to recognize the pain.

Thanks for voicing these concerns, and I believe git is (slowly) moving toward being more user friendly. Some developers tried to force the git kool-aid on me in 2009, and I steadfastly resisted; I was happier living in svn-hell than trying to make the jump to something “worse.” By 2013, I was forcing the git kool-aid on others, and liking it better than svn; it had matured considerably in the meantime and gotten much better integration with other tools.

Keep pointing out the flaws, once people adopt git they tend to forgit how seriously messed up it is, just because it is so much better than what they left. I’m introducing a new group to git as I type this, and they’re feeling the pain. It still could be easier than it is.

For a clean repository after git stash, one can, prior to the stash, git add .

This will send all untracked files into the stash afterwards.
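A minimal sketch of that tip, in a throwaway repository with hypothetical file names and a dummy identity. Once a new file is staged it counts as a change to the index, so a plain `git stash` takes it along and `git stash pop` brings it back.

```shell
#!/bin/sh
# Sketch: staging new files first puts them in the stash.
# Throwaway repo; file names and identity are hypothetical.
set -e
export GIT_AUTHOR_NAME=demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=demo GIT_COMMITTER_EMAIL=demo@example.com

work=$(mktemp -d)
git init -q "$work/repo"
cd "$work/repo"
echo tracked > tracked.txt
git add tracked.txt
git commit -q -m "init"

echo new > newfile.txt
git add .                    # newfile.txt is now staged, so stash will take it
git stash -q
stashed_away=$( [ -e newfile.txt ] && echo no || echo yes )

git stash pop -q             # brings newfile.txt back
```

Files matched by .gitignore are skipped by `git add .`, so they stay behind either way.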

It was not a new concept. Git took a lot from BitKeeper which was not only not new but was deeply familiar to Kernel developers after years of use.

I’ve been using git for at least five years now, if not more. The only commands I’ve ever had to use are:

git pull : Get the remote commits

git push : Put my commits

git commit : Create a commit

git checkout : revert a file

git checkout -b : create a branch

git rebase : rewrite my local history to make it look as though the previous commits were always there to begin with. (rarely use this)

People make this more complicated than it needs to be. Either they port over their concept of how SVN works and try to apply it to git, or they start making needlessly complicated choices with how they want to manage their VCS.

how does bzr complicate things even more?

through mandatory branch-by-cloning, and changing revision numbers as the only identifier. At least that was ~1 year ago.

If you are worried about #7 (git push -f and accidental remote branch deletions) have a look at http://luksza.org/2012/cool-git-stuff-from-collabnet-potsdam-team/

So in terms of useful functionality for your daily work, what couldn’t Mercurial do that Git could?

One thing I find a bit frustrating in this whole debate is that in theory, interface and underlying capabilities should be separate and interchangeable. It ought to be possible to have a git-like interface to Mercurial if you want that extra power (eg, rewriting published history), and it ought to be possible to have a Mercurial-like interface to Git for ease of use. But for some reason (perhaps because of CLI’s dual role in scripting) that never really happens.

Incidentally, notice that “git clean” also by default is disabled: clearly the Git developers are capable of making intelligent user interface choices. But instead of this output:

fatal: clean.requireForce defaults to true and neither -n nor -f given; refusing to clean

how about:

“clean wipes all untracked files from the working tree. Use ‘git clean -f’ or unset clean.requireForce if you’re sure. Use ‘git clean -n’ for a dry run.”

Ok, now I’m just dreaming.
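For reference, here is a sketch of the three behaviours discussed above, in a throwaway repository with hypothetical file names and a dummy identity: the refusal when neither -n nor -f is given, the -n dry run, and the -f run that actually deletes. The `-c clean.requireForce=true` on the first call just pins the default for the demo, in case a local config has changed it.

```shell
#!/bin/sh
# Sketch: git clean's safety catch, dry run, and forced run.
# Throwaway repo; file names and identity are hypothetical.
set -e
export GIT_AUTHOR_NAME=demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=demo GIT_COMMITTER_EMAIL=demo@example.com

work=$(mktemp -d)
git init -q "$work/repo"
cd "$work/repo"
echo keep > tracked.txt
git add tracked.txt
git commit -q -m "init"
echo junk > junk.txt

# With clean.requireForce true (the default), a bare "git clean" refuses to run.
git -c clean.requireForce=true clean || echo "git clean refused, as expected"

git clean -n     # dry run: lists what would be removed, deletes nothing
git clean -f     # actually removes untracked files
```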

Steveko (and a few more people here) are very reasonable in their expectations that in the year 2016 we deserve to have tools with smart, rather than cryptic, user interface. And smart error and warning messages, that respect the user. But the Linux community was always opposite from that. Why?

Well, they’ve spent half of their life learning to use cryptic commands, and now they don’t want them simplified. But why? Here’s why:

1. Now that they have learned everything by heart, it seems easy. They’ve forgotten their own pain and now don’t understand how others can find it hard to learn. Why wouldn’t you spend a few months on this tool, a few years on that tool, another few years on another tool. After all we know that an average human being lives for 800 years, out of which the youth lasts for the first 750. Right?

2. Now that they’ve learned the tool, they don’t want user interface to change. Because that would mean they would have to learn it again. And feel that pain again… hmmmm, maybe that’s exactly what they deserve.

3. Now that they know how to use the tool, why would newbies have it any easier than they had? Let them go through the same hell. Let everyone forever use crappy UI just because they had to use it. Let in the 24th century Capt. Picard of the Enterprise be blown away by Klingons because the ‘git am’ patch didn’t apply the new photon torpedo launch procedure.

At the risk of over-repetition, tools that provide a friendly and much easier-to-understand front-end to git already exist. My favourite is SmartGit, which I have used for several years, but I am now using Sourcetree at work and I have to say that I am very comfortable with it too. It would be lovely to have the command-line interface tidied up but there’s no great urgency to do so when you have excellent GUIs like these. To everyone who is wrestling with the command-line – destress your life by downloading SmartGit, Sourcetree and GitKraken then picking the one you fancy.

Attributing this to the “Linux community” is false. The Linux community is also the origin of Mercurial (which is feature-equal to git and with a much easier command line interface – it is not only also from the Linux community, but just like git from Linux kernel developers) as well as wonderful graphical interfaces like TortoiseHG and KDE’s Dolphin.

There is a group of people who do not value good interfaces. But they are a subset of computer users, not a subset of the Linux community. And within the Linux community we at the same time have people who don’t value good interfaces and those who value them very much.

> So in terms of useful functionality for your daily work, what couldn’t Mercurial do that Git could?

Very little. Mercurial doesn’t have the index (modified files are just committed) but it has the “MQ” plugin which can handle the same behaviour. Light-weight branches are slightly more fiddly with Hg bookmarks, but they’re a reasonable approximation to Git’s branches now. (Took long enough though….)

I could not have easily done the CoreTardis-MyTardis history join with Mercurial, but that’s hardly day-to-day.

> It ought to be possible to have a git-like interface to Mercurial if you want that extra power (eg, rewriting published history),

That would be nice, but the Hg devs would probably prevent any core changes that would allow that behaviour, and I don’t think plugins can influence the wire protocol. Their aim is to avoid lost commits at the expense of “things you misguidedly might think are a good idea”. You might be able to trigger it via remote hooks somehow though.

This is a good example of the mindset of the average Hg user:

http://jordi.inversethought.com/blog/i-hate-git/

“I didn’t even *consider* that a VCS could remotely *delete anything*.”

> and it ought to be possible to have a Mercurial-like interface to Git for ease of use.

It’s called Hg-Git. ;-)

http://hg-git.github.com/

Average hg user here…

hg-git is a crutch. I’ve tried it a few times. It sometimes didn’t work at all, and I was unable to push from an hg repo to a git one. I didn’t figure out what the problem was, but the real problem for me is that people use git, and I have to work with them.

There are also other more fundamental problems that hg-git can’t solve, such as how hg stores different metadata (e.g. branch names) that are unrepresentable in git.

I wish I could just avoid using git, I really do, but there’s a whole community of people there who make it impossible.

I use hg-git whenever I interact with a git repo. When I use it for larger-scale development, I use a bridge repo (hg clone git://… git-bridge) so I have one point of interaction with git. For working on something I then clone the bridge-repo, hack on it, push back into the bridge repo and push from the bridge repo into git.

(that’s the old outgoing-incoming repo structure :) )

For merging history, did you check the convert extension? That can rewrite filepaths, get partial history and all that stuff.

If the history is similar you likely don’t even need that:

The honest way (shows the real merge; do this if a rewritten history is not a requirement):

cd repo1

hg pull --force repo2

hg merge

hg commit -m "merged repo1 and repo2"

The rebase way:

cd repo1

hg pull -f repo2

hg phase -f -d -r "all()"  # allow rewriting published history

hg rebase -s -d .

The regrafting (can join anything, but is more work):

hg init newrepo

hg pull -f repo1

hg pull -f repo2

hg graft $(hg log --template "{node} "

hg phase -f -d -r "all()"

hg strip

hg strip

hm, lost a tag to the filter… rebase way again:

and regraft:

hg init newrepo

hg pull -f repo1

hg pull -f repo2

hg graft $(hg log --template "{node} "

hg phase -f -d -r "all()"

hg strip [first commit of repo 1]

hg strip [first commit of repo 2]

Your git diagram illustrates how to contribute to a project that you are not an approved contributor on.

How would you contribute to a subversion hosted project that you didn’t have permission to contribute to? Most projects have tight reins over who can contribute, and they would tell you to submit a patch.

These extra steps should be included in your subversion diagram.

My guess, based on admittedly limited experience, is that it was much more common to grant direct commit access on Subversion projects than Git ones. MediaWiki is a case in point: it had dozens, perhaps hundreds of committers. It’s currently switching to Git, and simultaneously switching to the “gated trunk” model with very few direct committers. They’re also using Gerrit, a code review tool, which I understand to be a whole extra source of VCS pain :)

But yes, perhaps I should include patch submission as an alternative path in both diagrams.

The way it used to work when everyone used CVS/SVN was to submit patches by email. Commit access was still pretty guarded, as unknown people can’t be trusted not to be assholes.

Most projects would accept patches by email from new/unproven devs in the SVN/CVS days.

Keep in mind that just because many open-source projects do that doesn’t mean all have to; if you’d rather just let people commit to your repository, then by all means… After all, forks and pull requests aren’t even a Git thing, they’re a GitHub thing… (and other Git tools, like Stash, are beginning to follow suit)

I see it as a great feature…

Just allowing commit access to your repository is fine as long as there are few contributors, but how will you ever make sure that your project stays on track towards its vision if you give thousands of contributors commit access? Even open source projects need someone at the rudder… I know that from a “patcher’s” perspective it may be a bit cumbersome, but as the tools improve it gets easier and easier to work this way, and today it’s mostly done with a single click of a button… All the commands are neatly hidden beneath…

Turn it around: many/most open source projects are run in spare time. Giving the repository owners more tools to manage what is accepted into the project, at the expense of the developers’ time, may seem ridiculous at first. But for each extra 15 minutes you spend, you may save 5 minutes of the owner’s time… Try multiplying that by a thousand… If accepting incoming patches becomes too big a task, they pile up and some of them will never be accepted because there simply isn’t enough time…

The GitHub model speeds that up for the owners by an incredibly large factor… Yes, at the expense of your time… But it means that more contributions are accepted in the end; doesn’t that serve everybody’s interest?

Agreed, it is complex.

#7: Contributors with push access need to be painfully aware of what forcing a push does. It’s pretty rare that you should have to use it. If you host privately with Gitolite, you can use ACLs to prevent rewinds on branches (a force push being an extreme form of rewind+ff). It’s a pity this isn’t possible on GitHub. However, it’s worth noting that the old history is probably still available in your reflog and in other people’s clones.
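A minimal sketch of that last point, assuming a reasonably recent Git on the PATH. It rewinds a branch locally with `git reset --hard` (standing in for a force-push rewind) and then recovers the lost commit from the reflog. The repository and file names are made up for the demo, and the identity variables are set only so commits succeed without a global config.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Throwaway identity so commits work without a global git config (demo only).
export GIT_AUTHOR_NAME=Demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=Demo GIT_COMMITTER_EMAIL=demo@example.com

tmp=$(mktemp -d)
trap 'rm -rf "$tmp"' EXIT
cd "$tmp"

# Hypothetical repository with two commits.
git init -q repo && cd repo
git symbolic-ref HEAD refs/heads/master
echo one > file.txt
git add file.txt
git commit -qm "first"
echo two >> file.txt
git commit -qam "second"
lost=$(git rev-parse HEAD)

# Simulate a rewind: the branch no longer points at "second".
git reset -q --hard HEAD~1

# The reflog still records where HEAD was before the reset...
git --no-pager reflog -n 3
# ...so the previous position (HEAD@{1}) can be restored.
git reset -q --hard "HEAD@{1}"
echo "recovered: $(git log -1 --format=%s)"
```

Reflog entries expire eventually (by default after months, not days), so this is a safety net rather than a backup.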

#9: Much the same applies to rebasing. It’s a really useful tool. Many SVN users put off committing their work until they are 100% happy. That’s a complete anti-pattern for VCS. Git encourages you to commit frequently with the promise that you can tidy up later. The only rule of thumb is that you shouldn’t rebase a commit that has already been pushed, since publishing the rewritten commit would require a force push.

#10: That’s a poor comparison. You assume that the SVN user has direct commit access to their own project, but the Git user is collaborating with third parties on GitHub.

Re: #9, I think the same “anti-pattern” applies to Git. The difference is that with Git you commit frequently to your *local* repository. If you frequently commit small, poorly defined chunks of work to a public repository, you will soon receive complaints. And while Git “promise[s] that you can tidy up later”, for novice and intermediate users that tidying up is extremely difficult. (Witness the lead maintainer of a Git-hosted project confessing insufficient “Git-fu” to cherry-pick a series of commits here.)

Re: #10: Intentionally, because SVN is associated with a culture of direct committing, and Git isn’t. (And because that’s my direct experience based on three projects I’ve worked on that switched from SVN to Git.)

Your assumption that those local commits have no value is wrong. The ability to commit locally and use it as a worklog is fantastic, and if you fix a bug you can just cherry-pick it to a production branch from that local branch. Following on from that, the idea that squash and cherry-pick are complex commands is borderline insane to me; they are both exceptionally straightforward, some of the easiest parts of git to learn… it sounds like someone has never bothered to try them if they are having issues with them.

Seriously, let me teach you git cherry-pick. Find the commit you want… note its hash. Check out the branch you want it applied to… git cherry-pick … you now have enough git-fu to cherry-pick.
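Those three steps as a runnable sketch in a throwaway repository. The branch names `worklog` and `production` are hypothetical, borrowed from the scenario in the previous comment, and the hash is captured with `git rev-parse` rather than copied by hand.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Throwaway identity so commits work without a global git config (demo only).
export GIT_AUTHOR_NAME=Demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=Demo GIT_COMMITTER_EMAIL=demo@example.com

tmp=$(mktemp -d)
trap 'rm -rf "$tmp"' EXIT
cd "$tmp"

git init -q repo && cd repo
git symbolic-ref HEAD refs/heads/master
echo base > app.txt
git add app.txt
git commit -qm "base"

# Hypothetical branches: "production" for releases, "worklog" for local work.
git branch production
git checkout -q -b worklog
echo wip > notes.txt && git add notes.txt && git commit -qm "wip notes"
echo fix > fix.txt && git add fix.txt && git commit -qm "bug fix"

# 1. Find the commit you want and note its hash.
fix=$(git rev-parse HEAD)
# 2. Check out the branch you want it applied to.
git checkout -q production
# 3. Apply just that commit; "wip notes" stays behind on worklog.
git cherry-pick "$fix"
git --no-pager log --oneline
```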

Regarding cleaning up existing commits (extremely difficult, according to you): let’s say you have made 8 ugly commits and you want to clean them up… git rebase -i HEAD~8 … change “pick” to “squash” on every commit after the first, and it will combine them into one commit and pop up your editor with all your commit messages, allowing you to summarize / write up whatever you want.
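Because `git rebase -i` stops and waits for an editor, the sketch below swaps in a different, non-interactive technique that gives the same end result for the most recent commits: `git reset --soft HEAD~N` moves the branch back while keeping the changes staged, and one new commit replaces them. Three commits stand in for the eight mentioned above; file names and messages are placeholders.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Throwaway identity so commits work without a global git config (demo only).
export GIT_AUTHOR_NAME=Demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=Demo GIT_COMMITTER_EMAIL=demo@example.com

tmp=$(mktemp -d)
trap 'rm -rf "$tmp"' EXIT
cd "$tmp"

git init -q repo && cd repo
git symbolic-ref HEAD refs/heads/master
echo base > f.txt
git add f.txt
git commit -qm "base"

# Three "ugly" work-in-progress commits.
for i in 1 2 3; do
  echo "step $i" >> f.txt
  git commit -qam "wip $i"
done

# Move the branch back three commits, keeping their changes staged...
git reset --soft HEAD~3
# ...and record them again as a single commit.
git commit -qm "Add steps 1-3"
git --no-pager log --oneline
```

Unlike `rebase -i`, this only handles a run of commits at the tip of the branch and drops their individual messages, so you write the combined message yourself.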

I suspect people are confusing git being hard, with an absolute inability to be bothered to learn the tool.

I’ve used ‘git squash’ (or rather, ‘git rebase’ in interactive mode), and find it unintuitive: I rarely get the result I want on the first try. The problem is that “squashing” a commit really means combining it with another, but I never remember whether it combines it with the previous or the next commit.

‘git cherry-pick’ is fairly straightforward, and works – but usually feels like a workaround when something has gone wrong.

“I suspect people are confusing git being hard, with an absolute inability to be bothered to learn the tool.”

Not in my case.

Understanding that mq is hg’s answer to the index/rebasing/etc., that’s a problem for me, because I find mq pretty awful. It amounts to a new VCS, except its interface is not really more usable than git’s. So why am I using hg, again? Even if hg is easier and saner at the beginning, if you need staging then git very quickly becomes easier, because hg requires you to use mq to work on your commits, while git lets you use a pretty good VCS to work on your commits (namely, git).

I should not be forced to start proliferating working copies just to put together clean commits to a public repo, that is insanity.

Why use mq at all? Hg doesn’t force you to have a staging area as git does. I have never needed something like this and I’m pretty fine this way.

The workflow should be kept as simple as possible. I think that #8, “Burden of VCS maintenance pushed to contributors”, is particularly interesting.

Great article!

One of the main points of git is that maintenance is pushed to the edge, which allows for greater scaling. Imagine if Linus had to do the housekeeping for every coder on the kernel; nothing would be achieved. It’s not a bug, it’s a feature.

It’s a system which is easy to use for maintainers but hard for contributors and which places most of the workload onto the contributors.

Shouldn’t a system which places more work on the contributors be especially easy to use for contributors?

And then there is http://fossil-scm.org/, which is a pleasure to use.

Here’s another vote for Fossil! The genius behind SQLite wrote it too, so it’s got good genes.

I’ll get yelled at for this one, but before all of these systems there was BitKeeper. We’re still here, and if you want the power of DVCS without all the gotchas of a sharp and pointy Git, check us out. It’s expensive, but we do stuff that you can’t do in Git (yet; I’m sure they’ll catch up).

The annoying thing about VCS, compared to say, an editor, is that the basic rule of “if you don’t like it, use something else” doesn’t apply. (So in my case, since I never start open source projects, I’ll never get to choose a Git alternative.) It’s a pity the DVCS authors haven’t been able to separate interface from infrastructure, coming up with a robust information model that different front ends could support.

I wouldn’t mind paying for bitkeeper if you made it free. I have paid for free software, but the non-free stuff you did to Linux about forbidding reverse-engineering the protocol is unforgivable, especially if you plan to keep on doing that.

I’m not sure what we should have done, even after all these years. I suppose a better answer might have been a stripped down free version (no guis, no subrepos, no binary server) and a for-pay enterprise-y answer. In retrospect, that might have been a better answer.

As an engineer, I didn’t much care for that answer, I was trying to help Linus and his team and I wanted them to get the good stuff, not half of the answer.

As for not reverse engineering the protocol, I know everyone hates that but we were trying to protect what little business model we had. Freely admit I went about it wrong, though given the goal of trying to help Linus and have a business, even now I don’t have a better answer.

I wonder why the open source community (ok, RMS) reacted so vociferously to the use of a non-free VCS like BitKeeper, yet they seemingly have no problem with the non-free VCS hosting site GitHub. GitHub is making squillions off hosting Git, yet as I understand it, the Github code itself is closed source.

Well, I’m personally not ok with it. I had to do this:

https://bitbucket.org/site/master/issue/3054/make-bitbucket-free

Oh, and I’m not the only one who is not ok with github’s non-free nature:

http://programmers.stackexchange.com/questions/129152/are-there-open-source-alternatives-to-bitbucket-github-kiln-and-similar-dvcs

http://mako.cc/writing/hill-free_tools.html

@steveko: In a nutshell, if github turns around tomorrow and demands money, or it cuts off access, then everyone who currently uses github will simply switch to another repo for upstream. By git’s nature, everyone already has a copy of all the data anyway.
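Assuming local bare repositories stand in for the hosting sites (`oldhost.git` and `newhost.git` are hypothetical names), the switch described above amounts to pointing `origin` somewhere else and pushing, since the clone already holds the full history. A minimal sketch:

```bash
#!/usr/bin/env bash
set -euo pipefail

# Throwaway identity so commits work without a global git config (demo only).
export GIT_AUTHOR_NAME=Demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=Demo GIT_COMMITTER_EMAIL=demo@example.com

tmp=$(mktemp -d)
trap 'rm -rf "$tmp"' EXIT
cd "$tmp"

# A project that currently lives on the "old host".
git init -q seed
git -C seed symbolic-ref HEAD refs/heads/master
echo hello > seed/README
git -C seed add README
git -C seed commit -qm "initial"
git clone -q --bare seed oldhost.git
git clone -q "$tmp/oldhost.git" mine

# The old host disappears...
rm -rf oldhost.git

# ...but the clone still has the full history, so publish it elsewhere.
git init -q --bare newhost.git
git -C mine remote set-url origin "$tmp/newhost.git"
git -C mine push -q origin --all
git -C mine push -q origin --tags
```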

/jordi;

You summed it up perfectly, Jordi. ;)

I Agree!!

I’m still trying to figure out how to use Git. It’s just not logical… well, not to me at least. Most of the time I’m the only one working on it, and I just want to be able to store it securely off-site at my hosting provider, with the ability to roll back if need be. Simpler would have been much better.

The very defensive counterarguments from self-proclaimed power users are very shaky. There are just so many permutations of potential errors and problems with Git that even power users are most likely unaware of just how many errors they introduce into their beloved system.

Reminds me of the DOS WordPerfect defenses.

Glad to find out about Mercurial

Heh, what do you expect from “below average” Torvalds, who created such rubbish as Linux?? :) He writes as he thinks.

Mercurial, having the same powerful features, looks way more professional.

I’ve got nothing against the approach that led to Git being created. I just wish that:

Since git relies on shell scripts for extensions, you cannot actually change the commands without breaking everyone’s scripts…

That porcelain is pretty much set in stone, but the name fits, since it makes everything built on it extremely brittle…

How are you forced by git to make feature branches? If you like, you can work directly in master and never create any branches. Or, if you prefer, you can make all your changes in the same branch (and then pull that into master; this might be good if you are using GitHub).

I hope that at least some people here are able to distinguish between “git” and “github” as well as being able to distinguish between “a good idea” and “being forced”.

Why should you need to change the commands?

In general, when dealing with an interface of any sort, you never change existing interfaces, you only add new interfaces. You do not even deprecate old interfaces until after you have addressed most all of the needs being addressed by the older forms. (And deprecating basically means you go for some period of years with the old interfaces working, but setting off warnings when they are used.) In some cases you can get away with deeper changes (like when there’s an accepted spec and you are living within it), but you need to be careful — that way lies madness if you go too far. Actually, introducing new interfaces can get crazy also. The most useful thing you can do with most interface changes is to reject them…

Still, when you have a good interface change, and you’re ready to deploy it? You can do that.

For example, currently git clown will not do anything except ask whether you meant “clean” or “clone”, but if you had a good interface design for git clown, nothing would conflict with your implementing it.

Of course, and this is worth repeating: then you have to ask yourself, why anyone should need a “git clown”…

Git, despite its protestations, is a social tool. Decisions like these “If you like, you can do X” are rarely up to one person – you need to do what the team does.

This implies that solutions to these issues need to be social rather than technical.

Because if you are not already used to git, the current commands are pretty unintuitive and hard to learn.

If you want an unchanging interface, you have to design it cleanly from the start and be extremely conservative with changes. Otherwise you get help output like the one seen in `git help pull`, because you can never throw out a bad idea later on.

And the easiest way to state that you want an unchanging interface is to tell people to use it in scripts. As soon as you have enough users, every change to an existing option wreaks havoc on so many scripts which you do not control that you cannot actually make the change.

Arne is confusing the API, where your semantics are fixed once you name them, with user interface. The fact that the API and the commands can both be accessed by the shell *is* confusing.

“Git history is a bunch of lies” – I second this one – we do have to lie as well so we can have a clean history! It’s not acceptable!

This kind of simplified FUD is just not constructive. Anything you can do in subversion you can also do in git.

Try to maintain a large project in Subversion before you say such things. It’s a horror, and all the simple things about the Subversion API are just not worth anything.

No, FUD is what it is.

In git the regularly used simple things are hard and the seldom-used hard things are simple.

Your answer belies the exact mindset which makes git so unwelcoming.

Actually, maintaining a large project in SVN is quite easy: you can easily check out partial bits, and merging and branching can apply to sub-sections of the repo (which is why SVN merging isn’t quite as good as git’s; it has to deal with more complexity). I worked on a 3 GB repo at a previous company without any problems.

Part of the problem is that no one considers the use cases of the other system; they always prefer to spread FUD in favour of their preferred system. Don’t criticise someone else for spreading FUD and then do it yourself!

And *this* is why I avoid open source like the plague!

Don’t. There are thousands of great open source developer tools out there. The Linux and Gnu communities have a particularly unfortunate, and highly influential attitude towards how people should use and develop software – but not everyone subscribes to it.

Hey, you’re using open source right now. And Git may not always be convenient or intuitive, but it’s a tool and it’s available for free use.

Just have a look at Mercurial. It shows how to do DVCS right.

Git has beautiful technical design but quite appalling usability design.

You can Commit, Fetch, Merge, Publish, Pull, Push, Rebase, Stage, Stash and Track. To a beginner these all sound like they might do the same thing.

#6 and #8 and #10 strike at the crux. git is thoroughly decentralized, to the point where everyone is a peer. Everyone is a committer, everyone is a maintainer. Branches and tags are unshared by default. Someone could potentially interact with many other peers, and hence have many “remotes”. To some degree, this level of decentralization is just a total paradigm shift. As others have mentioned, Mercurial is more moderate in this way.

No argument from me about the learning curve and negative usability of the information model, documentation, and commands.

“Everyone is a committer, everyone is a maintainer.”

Well put – humble contributors never had to be maintainers before.

SVN is still around, there’s a reason for that.

«Visual SourceSafe Is still around, there’s a reason for that.»

And in both cases, that reason is called “loss aversion”.

You mean “transition costs” rather than “loss aversion” I believe. And that’s a perfectly valid reason to stick with something if the benefits from switching aren’t high enough.

A rubbish article, comparing apples with oranges. What exactly are you comparing: distributed VCS or centralised VCS? You should either present how SVN is used in a distributed environment, to compare with Git in the same usage, or present how Git is used in a centralised environment, to compare with SVN in the same usage. This would help newcomers learn how they should use them and which tool is more suitable.

One of the best complaints about Git I’ve ever heard; I completely agree with you!

I can even add:

1/ no decent IDE support (e.g. in Eclipse, Git has a completely different GUI from SVN or CVS: where is my commit message history, the possibility to ignore untracked files, …)

2/ once you’ve screwed something, it is really hard to go back.

For example, if you fetch, then commit, then push… OMG, you’ve forgotten to merge, so the remote repo says “no, you cannot commit”, and it can be a real pain to merge your own local copy with “itself”.

I think you’re looking for “git merge master origin/master” in between the commit and the push.
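A sketch of the scenario from the previous comment, with a local bare repository standing in for the shared remote and two hypothetical clones, `alice` and `bob`. It runs `git merge origin/master` while on master, the usual form of that step, and passes `--no-edit` only so the merge doesn’t open an editor inside a script.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Throwaway identity so commits work without a global git config (demo only).
export GIT_AUTHOR_NAME=Demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=Demo GIT_COMMITTER_EMAIL=demo@example.com

tmp=$(mktemp -d)
trap 'rm -rf "$tmp"' EXIT
cd "$tmp"

# Shared remote (a local bare repo) with one commit on master.
git init -q seed
git -C seed symbolic-ref HEAD refs/heads/master
echo base > seed/f.txt
git -C seed add f.txt
git -C seed commit -qm "base"
git clone -q --bare seed origin.git
git clone -q "$tmp/origin.git" alice
git clone -q "$tmp/origin.git" bob

# Bob commits and pushes first.
echo bob > bob/b.txt
git -C bob add b.txt
git -C bob commit -qm "bob's change"
git -C bob push -q origin master

# Alice commits on top of her stale copy; her push is rejected.
echo alice > alice/a.txt
git -C alice add a.txt
git -C alice commit -qm "alice's change"
if ! git -C alice push -q origin master 2>/dev/null; then
  echo "push rejected: origin/master has moved on"
fi

# The missing step: fetch, merge the remote branch, then push again.
git -C alice fetch -q origin
git -C alice merge -q --no-edit origin/master
git -C alice push -q origin master
```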

For my code to be safe I have to feel in control of it. With git I feel lost and insecure. How safe is my code?

Glad to see I’m not alone with this feeling…

For my code to be safe I have to use a tool that safeguards my code from the ground up, and I must use that tool properly. Feeling has little to do with it, but nevertheless, with git I feel powerful and completely in control. How safe is my code? I have never lost any code with git. Glad to see I’m not alone with this feeling…

I like git. I have used SVN, TFS, and, though ashamed to admit it, VSS (against my will).

Here is an assessment from my point of view.

TFS – Hands down the best option for a large team of .NET developers working on a single project. However, I find it’s overkill in smaller projects with, say, 1-5 developers. It’s just too involved.

VSS – Total Crap! If you use this product willingly, you should be dragged out into the street and shot. This is where I could go on a rant, but I won’t. Just google VSS and you will see.

SVN – Great open source source control. There is not a lot to complain about. There are a lot of great add-ons available for Visual Studio as well. As a matter of fact, my home development server ran SVN for years. However, I did switch everything over to git. My issue with SVN is the same as my issue with TFS: it’s just too involved. You have to have a server, just like TFS. There is administration work involved, just like TFS. I didn’t realize I could git by without these tasks until I started using git. Like TFS, though, it’s a better option for larger teams.

GIT – Fast, flexible, and easy. It takes me all of 2 seconds to set up a new repository. I have retired my “development server” and just use Dropbox, GitHub, or Bitbucket (check out Bitbucket if you don’t already know about it; they are just like GitHub, but you can have free private repos) as my remote. It’s so fast and simple that for me it takes the work out of source control. I do feel that TFS or SVN is better for larger groups, though, due to all the administration options available. There are just more ways to manage them that are not really necessary for smaller teams. The other downside of git is that some folks are afraid of command line tools for some reason. My advice to them is to grow a pair and learn the tool, because it’s worth it. You won’t find me talking that way about any other command line tool, period, and I have to use svcutil, stsadm, and a couple of others out of necessity. I don’t go around talking them up, because they would be better as GUI tools in my opinion. With git I feel this is not the case. Bash makes it faster, which is what I truly love about git to begin with.

That’s my 2 cents. Take it for what you will.

-Adam

As someone who is forced to use TFS in a situation that TFS wasn’t made for (non Visual Studio work), I loathe it with a passion.

I’m sure it’s great for VS users, but us linuxy guys get totally shit on.

And as a related note, who the hell thought it was a good idea to use the write bit of the permissions as the is-this-checked-out flag?!

git follows a simple unix philosophy: make it scriptable.

and also “do one thing, do it well” (this applies to the individual parts, git is made of a bunch of smaller programs that make up the whole suite not just one monolithic program)

all the command line things are made to be scripted, and if someone wanted to make a simple GUI for git they could; it’s just that no one cares enough. just about everyone who uses it and has the capacity to make the gui doesn’t care enough to make one. they are fine with the scripts and programs that make up the git suite.

also you mentioned above the basic commit:

git add

git commit

git push

you can script that to have one script do all those, call it “checkin.sh” or something

#!/bin/bash
# checkin.sh: stage all changes, commit with the message passed as $1, then push
(git add -A && git commit -m "$1" && git push && echo "done") || echo "failed"

anything you find yourself doing often can be dumped into a script, that is the philosophy behind it, leave matters of interface to other people, design the system to be fast, handle merging well, be distributed, etc but allow it to be scriptable so people can build interfaces on top of it to their hearts content.

I actually think git fails on both those counts.

But yes, you can obviously script your way around anything for one local environment. Not a great solution, in my experience, if you’re frequently working on different environments, and in different teams. (“You’re having trouble committing? My “gitcommit” script runs just fine…”)

By making it scriptable using the basic commands, they pretty much barred their way towards ever having a simple interface.

If they change one of their core commands in a backwards-incompatible way, all user-scripts break. And this already happened (there was a simpler git ui whose name I forgot. It tried to wrap around the git commands and regularly broke down because of some incompatible change).

You cannot be scriptable, fast evolving and easy to use at the same time.

“You cannot be scriptable, fast evolving and easy to use at the same time.”

You can if you use good abstractions for your API. But that requires more careful thought up front….

Thank you for this. I’m struggling with trying to contribute to a GitHub hosted Open Source project. I thought it was me. Glad to know it’s git.

One day on a big project using GIT I got an email saying not to commit any changes or pull anything because the repository had been inadvertently reset to the state it was in three weeks before.

It appears a developer had returned from holiday and having found it hard to merge their work simply forced a push.

This could be seen as an argument against git. I suspect Management would consider it an argument against holidays.

I cannot think of anyone who has used git and liked it.

Could someone point out what advantage a distributed version control system has over (say) a clustered SVN system?

They should have enabled the non-fast-forward protection on the remote (receive.denyNonFastForwards), which is there for precisely this reason.
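The server-side option meant here is presumably `receive.denyNonFastForwards`, set in the config of the shared repository. A minimal sketch, with a local bare repository standing in for the shared server (all repository names are hypothetical):

```bash
#!/usr/bin/env bash
set -euo pipefail

# Throwaway identity so commits work without a global git config (demo only).
export GIT_AUTHOR_NAME=Demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=Demo GIT_COMMITTER_EMAIL=demo@example.com

tmp=$(mktemp -d)
trap 'rm -rf "$tmp"' EXIT
cd "$tmp"

git init -q seed
git -C seed symbolic-ref HEAD refs/heads/master
echo base > seed/f.txt
git -C seed add f.txt
git -C seed commit -qm "base"
git clone -q --bare seed shared.git

# On the shared repository: refuse pushes that would rewind a branch.
git -C shared.git config receive.denyNonFastForwards true

git clone -q "$tmp/shared.git" dev
echo more >> dev/f.txt
git -C dev commit -qam "second"
git -C dev push -q origin master

# The "back from holiday" move: rewind locally, then force-push.
git -C dev reset -q --hard HEAD~1
if git -C dev push -q --force origin master 2>/dev/null; then
  echo "force push accepted: shared history was rewound"
else
  echo "force push refused by receive.denyNonFastForwards"
fi
```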

Anyway, to answer your question, if you’ve ever had to work on a less than 100% available link, or a link that’s slow and/or expensive, then having git is invaluable.

In a more prosaic setting, I’ve found git awesome for tracking an upstream project: pulling in and reviewing their changes at regular intervals, and applying my local platform changes over the top.
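One way to do that, assuming a rebase-based workflow (merging `origin/master` into the local branch would also work): keep the local platform changes on their own branch, fetch upstream, review what’s new, then replay the local commits on top. The `upstream` and `mine` repositories and the `platform` branch are hypothetical.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Throwaway identity so commits work without a global git config (demo only).
export GIT_AUTHOR_NAME=Demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=Demo GIT_COMMITTER_EMAIL=demo@example.com

tmp=$(mktemp -d)
trap 'rm -rf "$tmp"' EXIT
cd "$tmp"

# Hypothetical upstream project.
git init -q upstream
git -C upstream symbolic-ref HEAD refs/heads/master
echo v1 > upstream/core.txt
git -C upstream add core.txt
git -C upstream commit -qm "upstream v1"

# Our clone, with local platform changes on their own branch.
git clone -q "$tmp/upstream" mine
git -C mine checkout -q -b platform
echo port > mine/platform.txt
git -C mine add platform.txt
git -C mine commit -qm "platform tweak"

# Upstream moves on.
echo v2 > upstream/core.txt
git -C upstream commit -qam "upstream v2"

# Pull in upstream's changes, review them, then replay ours on top.
git -C mine fetch -q origin
git -C mine --no-pager log --oneline platform..origin/master
git -C mine rebase -q origin/master
```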

I don’t even know how I’d approach that with subversion without a whole bunch of jiggery-hackery.

Right, well I wrote this blog post in a fit of rage at the crappy user interface decisions one has to deal with; I’ve never really doubted the great benefits the underlying Git infrastructure has to offer, with its superior branching and merging features. The user workflow could be still improved further though: on one project, I’m now in the situation of having to create a branch, commit code, push, then issue a pull request, then later delete the branch (and push the deletion) for every minor feature – sometimes as few as 2-3 lines of code. This could definitely be streamlined – but Git wasn’t written for contributors, it was written for maintainers.
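For reference, the git side of that per-feature cycle looks roughly like this; the pull request itself happens on the hosting site, outside git. A local bare repository stands in for the host, and `tiny-fix` is a hypothetical branch name.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Throwaway identity so commits work without a global git config (demo only).
export GIT_AUTHOR_NAME=Demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=Demo GIT_COMMITTER_EMAIL=demo@example.com

tmp=$(mktemp -d)
trap 'rm -rf "$tmp"' EXIT
cd "$tmp"

git init -q seed
git -C seed symbolic-ref HEAD refs/heads/master
echo base > seed/f.txt
git -C seed add f.txt
git -C seed commit -qm "base"
git clone -q --bare seed origin.git
git clone -q "$tmp/origin.git" work

# 1. Create a branch and commit the (tiny) change.
git -C work checkout -q -b tiny-fix
echo fix >> work/f.txt
git -C work commit -qam "Tiny fix"
# 2. Push it so a pull request can be opened on the hosting site.
git -C work push -q -u origin tiny-fix
# 3. Once the PR is merged, delete the branch locally and on the remote.
git -C work checkout -q master
git -C work branch -q -D tiny-fix
git -C work push -q origin --delete tiny-fix
```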

It used to be that you’d contribute your changes upstream, and people would work to produce stable, well-defined, documented, clean projects.

Now everybody just hacks and slashes their way in, and then proclaims git to be a godsend because it enables work-generating decisions like “I’m (and everybody else is) going to maintain a fork forever”. So then you wind up wasting time, forever, instead of just paying the up-front cost to design software properly.

In terms of market share, Linux is a success. In terms of technical quality, Linux is an unmitigated disaster zone of low quality code, failed designs, and rewrites. Git was built to support that development model.

“I’m now in the situation of having to create a branch, commit code, push, then issue a pull request, then later delete the branch (and push the deletion) for every minor feature – sometimes as few as 2-3 lines of code. This could definitely be streamlined – but Git wasn’t written for contributors, it was written for maintainers.” — That’s not quite fair; part of git’s core design involves accepting emailed diffs as full patches. Pull Requests (and the concomitant branch mania) are a relic of the Github Era, not Git itself.
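The emailed-patch path mentioned here goes through `git format-patch` on the contributor’s side and `git am` on the maintainer’s. A minimal sketch, with two local clones (`contributor` and `maintainer`, both hypothetical) and a directory standing in for the email:

```bash
#!/usr/bin/env bash
set -euo pipefail

# Throwaway identity so commits work without a global git config (demo only).
export GIT_AUTHOR_NAME=Demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=Demo GIT_COMMITTER_EMAIL=demo@example.com

tmp=$(mktemp -d)
trap 'rm -rf "$tmp"' EXIT
cd "$tmp"

git init -q seed
git -C seed symbolic-ref HEAD refs/heads/master
echo base > seed/f.txt
git -C seed add f.txt
git -C seed commit -qm "base"
git clone -q "$tmp/seed" contributor
git clone -q "$tmp/seed" maintainer

# Contributor: commit, then export it as an email-style patch file.
echo improved >> contributor/f.txt
git -C contributor commit -qam "Improve f.txt"
git -C contributor format-patch -q -1 -o "$tmp/outbox"

# Maintainer: apply the patch; author and message come from its email headers.
git -C maintainer am -q "$tmp"/outbox/*.patch
git -C maintainer --no-pager log -1 --format='%an: %s'
```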

I would agree, if I did not have Mercurial, which does everything git does while being easy to use.

That is a serious reason not to use it. The whole point of a SCM is to protect your commits. Anything else is just gravy.

I’ve worked at a place where every so often someone would pop up to the git guru and ask for assistance as they thought they’d just trashed their work. That might be ok in an open source situation, but totally unacceptable if you got paid to write it. I’ve used git for a project and all I did was commit and push to master (it was just me using it) but even then one day I found it had pushed to a branch and required a pull… I still have no idea how I managed that given my workflow.

Maybe when the SVN guys add shelving, the reasons people use git will go away, but I doubt that. The reasons to choose it seem more political than anything else. Maybe someone will create a ‘centralised SCM for decentralised teams’ that has the ease of SVN with the convenience of git.

“It appears a developer had returned from holiday and having found it hard to merge their work simply forced a push.”

This is not a git issue; it’s a management/training issue.

First time that happens, send the dev to be retrained, and restore from backups.

Second time, fire the dev for incompetence.

Yep. With enough training, all software is perfectly usable. :p

I think this may be the most hopeless defence of a system since Ribbentrop’s Nuremberg testimony. I hope your attitude isn’t prevalent in nuclear power stations!

As many here have pointed out before, you’re blaming the user, not the interface. If your safety system relies on everybody knowing everything the self-appointed best coder knows, and on having a backup system that is somehow bulletproof (and budget-proof), and moreover if fear of dismissal is your primary data protection method, your company is quite clearly a terrible place to work (although eventually self-correcting). It’s important to try and grasp the notion that humans are not meant to program computers. We’re bad at it. Your personal vision of yourself may be a pointy-eared Vulcan, watching reams of Matrix-like code on six monitors, CSI-style speed typing away without a bug in sight, but that’s not a terribly robust approach to software design for the majority. Also, we’re not actually discussing code here, the primary reason you employ a coder, but a support system, designed to make life easier.

Obviously I’m biased, as a games developer, but software developers or computer scientists who don’t understand or dismiss the importance of a good user interface are a menace to everybody working alongside them, simply from an employment perspective. In the shorter term, it should be obvious that a source control system, designed to transparently protect historical versions of code and resources, that encounters so much UI criticism, and that requires *such* a large body of non-coding knowledge in order to use without concern in day to day tasks, requires at the very least an overhaul. I would argue everything from terminology to failsafe conditions could do with some renewed thought. Like the SA-80 rifle, raw power is somewhat undermined if the tool sometimes kills the user.

Use SmartGit, Luk.

SVN is great as long as 1) you have a fixed set of developers, 2) you don’t do much branching. Git supposedly – I don’t have much experience with it myself – makes exactly these things (“anonymous” commits and branching) easy.

If you want to include contributions from anyone, then the simple “update, code, commit” workflow turns into something like “update, code, diff >> foo.patch, find bug tracker/mail address/whatever that project uses, open issue/write mail, attach patch, submit, download patch, apply, commit”, which must rely on big complicated hard-to-automate stuff designed for completely different purposes (like a mail client or a web browser), and most of the easy-to-automate stuff (like syntax checking and unit tests) can only be run after all this has been done manually, so integration becomes a huge bottleneck.

If you need to merge between branches, as far as I understand the information model of SVN is just not really good for that, and so you are continuously confronted with stupid behavior (such as your own commits causing tree conflicts when you merge them back from trunk), and operations including deleting and renaming files can become quite destructive. (Worse, they often seem innocent when performed and become destructive when merged – e.g. if you delete a file and add some other file with the same name, that will be fine as long as you make them in separate commits, but when someone tries to merge them in at once, all hell breaks loose, and usually you have to delete the whole working copy, check it out again, find the offending commits – great fun if you were merging half a year’s worth of work! – and merge in multiple steps so that they do not get applied together.)

Yup. SVN is really not at all suitable for distributed open-source development. I have not tried, but I can well imagine the excruciating pain and inconvenience that it would cause.

Having said that, for small, co-located teams undertaking typical development activities in an SME-like environment, particularly when one or more team-members do not have a software development background, ease of use is paramount.

When coupled with TortoiseSVN on Win32, SVN knocks the socks off command-line Git (although in my mind SVN could be made simpler and less intrusive still).

Create an organisation on GitHub and your problems will be solved. It has been two years since I moved my colleagues onto git and GitHub. None of them are programmers, and none of them had any experience with git or, for that matter, with version control systems. Now everybody is happily working with no problems. The workflow is nothing more than git pull, git commit -a, git push.

Sometimes new branches are created; then you need to say precisely which branch to push to, and you need to use git checkout to switch between branches. In two years I have had only one cock-up, and after 10 minutes of swearing I fixed the problem in another 10 minutes, with no changes lost. The only thing that bugs me a little is the merge commits in the history. I could do something about it, but I am too lazy.

As a maintainer I sometimes had to dive into deeper waters, but I had no problem finding information on how to do that. I also prepared some mini documentation for my users, which contains everything they need to know.
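
The pull / commit -a / push loop described in this comment, plus the occasional branch, can be sketched as a shell script. This is a minimal sketch under stated assumptions: a local bare repository stands in for the GitHub organisation repository so that it runs offline, and all names (shared.git, alice, analysis.R, cleanup) are hypothetical.

```shell
#!/bin/sh
# Sketch of the everyday "git pull, git commit -a, git push" loop.
# Assumption: a local bare repository stands in for the hosted GitHub
# repository, so nothing touches the network. All names are hypothetical.
set -e
work=$(mktemp -d)
cd "$work"

git init -q --bare shared.git          # stand-in for the hosted repository
git clone -q shared.git alice          # each colleague clones it once
cd alice
git config user.name "Alice"
git config user.email "alice@example.com"

echo 'x <- 1' > analysis.R
git add analysis.R                     # brand-new files must be added explicitly;
git commit -q -m "Add analysis script" # "commit -a" only picks up tracked files
git push -q -u origin HEAD             # -u records the upstream for later pulls

# The day-to-day loop: pull, edit, commit -a, push.
git pull -q
echo 'y <- 2' >> analysis.R
git commit -q -a -m "Extend analysis"
git push -q

# Working on a separate branch: create/switch to it, then push it by name.
git checkout -q -b cleanup             # hypothetical branch name
echo '# tidy' >> analysis.R
git commit -q -a -m "Tidy up"
git push -q -u origin cleanup
git checkout -q -                      # switch back to the previous branch
```

The one non-obvious step for new users is that `git commit -a` only stages files git already tracks, so a brand-new file still needs a one-off `git add`.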

Yeah, the problem with advice like this, though, is that it comes from the perspective of a codebase maintainer. As I noted in my post, Git is written by, and for, VCS maintainers. My frustrations mostly stem from having to adapt to existing GitHub repositories: even if creating an organisation solves issues (I don’t know), it’s not something I could do.

If none of your users are programmers, you’re obviously not a typical case. What are you maintaining – documentation? Do you worry about keeping a clean history, encouraging your users to rebase? If not, that’s also atypical. (I’m not trying to discredit you; I just want to clarify the situation my post was based on, which is what causes so much frustration.)

Well, I call myself a maintainer, but I am really only the most knowledgeable user, the one who pays for the private repositories. Several projects are running happily without my intervention; I only participate in them for my real work, statistical consulting.

I work on statistical projects, and git is used for sharing R code and data. I do not worry about a clean history, because I have not found it useful. As I said, merge commits are annoying, but they do not interfere with blame history, so I can track who did what.

And yes, I understand that my setting might not be typical. But my experience contradicts several of the points you made. Hence the comment.

I think you highlighted an important point here. The maintainers of the code base/repository should send a one-page manual to the users/contributors that includes all the settings needed during setup, the commands for making changes, etc.

Thanks, Steveko.

The ironic thing is that your instructions, and especially your diagram, helped me understand what is going on.

Your diagram should be included in any git tutorial / manual.

Yeah. Because Git documentation tends to focus entirely on the relationship between your working directory and your local repository. It glosses over the relationship between your repository and remote repositories, even though that’s where much of the complexity in DVCS arises.

It’s because the Linux kernel developers don’t really use shared remote repositories, I believe. My impression is that each of them instead has their own repo and either emails patches or asks others to pull from them. This quirk of how kernel development works means they do things differently from most git users, but some UI decisions were made with the kernel workflow in mind.
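
The patch-by-email style of working described here maps onto `git format-patch` (the contributor exports commits as mail-formatted patch files) and `git am` (the maintainer applies them). The following is a minimal sketch under stated assumptions: no mail is actually sent, the patch file is simply read from a shared directory, and the repository and user names are hypothetical.

```shell
#!/bin/sh
# Sketch of the patch-by-email workflow: the contributor exports a commit with
# "git format-patch" and the maintainer applies it with "git am".
# Assumption: no mail is sent; the patch file is picked up from a local
# directory instead. All names are hypothetical.
set -e
base=$(mktemp -d)
cd "$base"

git init -q maintainer
cd maintainer
git config user.name "Maintainer"
git config user.email "maintainer@example.com"
echo "hello" > README
git add README
git commit -q -m "Initial commit"
cd ..

git clone -q maintainer contributor
cd contributor
git config user.name "Contributor"
git config user.email "contributor@example.com"
echo "world" >> README
git commit -q -a -m "Extend README"
# One mbox-style file per commit, with email headers (From:, Subject:) on top.
git format-patch -q -1 -o "$base/outgoing"

cd "$base/maintainer"
# Reads the email headers back out and records the contributor as the author.
git am -q "$base"/outgoing/*.patch
```

Because the author is taken from the email headers, the applied commit is credited to the contributor, while the maintainer becomes the committer.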

#7 is simply false. Git *never* destroys anything. It only adds. If you do any of the things you cited in your article to the repository, you can always use the reflog and check out the state from before the destructive push.

denomus, it is a recurrent myth that git doesn’t destroy data. It does. Garbage collection destroys data. push --mirror can destroy refs, another kind of data (the reflog may store only hashes, not the actual names of refs, which can be useful metadata). History-editing operations like a bad rebase can also destroy data.

Most “common” uses of git don’t destroy data, but if you’re learning git, it’s not that difficult to destroy data accidentally until you learn to not do it.

Well, I agree about garbage collection. But you can recover from a push --mirror or a bad rebase by checking your reflog (unless it has already been garbage collected).

But you have a point. Git is not a mere toy, and you have to take care of some things to make a good use of it. I just don’t think this is as common as the complainers imagine it to be.
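
The reflog recovery being argued about here can be sketched in a throwaway repository. This is a minimal sketch, assuming a deliberately bad rebase that drops a commit as the "mistake"; the branch name work is hypothetical. It only works while the reflog entry still exists: by default entries expire after 90 days (30 for entries no longer reachable), after which garbage collection can remove the commits for good.

```shell
#!/bin/sh
# Sketch: recovering from a bad rebase with the reflog, in a throwaway repo.
# The rebase deliberately drops a commit to simulate the mistake; the branch
# name "work" is hypothetical.
set -e
repo=$(mktemp -d)
cd "$repo"
git init -q
git config user.name "Demo"
git config user.email "demo@example.com"
git checkout -q -b work

for n in 1 2 3; do
  echo "$n" > "file$n.txt"
  git add "file$n.txt"
  git commit -q -m "commit $n"
done
before=$(git rev-parse HEAD)          # tip of the branch before the mistake

# The mistake: replay only "commit 3" onto "commit 1", silently dropping "commit 2".
git rebase -q --onto HEAD~2 HEAD~1

# The branch reflog records every position the branch has held;
# work@{1} is where it pointed just before the rebase moved it.
git reflog show -n 3 work
git reset -q --hard 'work@{1}'
```

Using the branch's own reflog (work@{1}) rather than HEAD@{1} matters here: a rebase writes several HEAD reflog entries, but moves the branch itself only once.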

It is also not a safe tool. And that’s an unnecessary problem.

You took the words right out of my mouth. Very good summary. Thank you for that.

Amazing post! I totally agree with most of your statements. I am still an SVN/VSS user, but I also really like git, for one reason only: I can use git commit without an internet connection. Obviously I can’t push without one, but I work on a lot of projects alone and on the go, so I like to be able to pull from my repo anywhere I have a connection.

Because I have a home server, which is also my PHP server as well as my SVN remote repo, I wanted to configure git with my current XAMPP virtual host setup. But I have been at it for around 4 days now, going through a lot of tutorials and blogs about serving git over HTTP, and it is just so damn complicated and not user friendly. I got to the clone stage over HTTP but just can’t get push to work. Setting up SVN under my XAMPP was extremely simple. I wish git was simpler, but it just isn’t.

I wish I could use git for my primary source control, but because it is so complicated, and because of the “Git’s hard, deal with it” part, I just gave up.

But I like this article because it’s honest and shows a different perspective from the blog posts and comments of git enthusiasts and fanboys: git is not for everyone.

I find Git slow as hell a lot of the time compared to SVN. I am using Git because it is, as I am told, “better”, but I wait several minutes for commits to complete when SVN would have taken seconds.

Really? What’s the setup? Where is the repository and how big is it? I’ve never heard Git criticised for speed before – other than the initial clone, which can be very slow.

You have quite a few very valid points about Git’s usability and I won’t argue with most of them. However, you are being overly kind towards subversion. I am currently a git-svn user and I have to deal with subversion’s limitations on a daily basis: extremely slow checkouts (think extended coffee break); my svn working directory is 4 GB, while my .git directory with full history is 600 MB, plus another 500 MB for a checkout. We have a rather messed-up corporate intranet that makes interfacing with the remote svn server quite painful; latency especially is an issue. Branching and merging require a single person dedicated to that job. That is both stupid and costly if you are used to git. Most of the non-git-svn users in our 50+ person team are eternally deadlocked on such backwards concepts as code freezes, elaborate and really messed-up branching strategies, having to work one commit at a time, conflict resolution, and waiting for ages for our build servers to churn through our builds. Our use of subversion is a poster child for why using subversion is a bad idea. It wouldn’t be fair to blame subversion for all our problems; there are many things that need fixing in this team. But it is certainly a big part of it.

Git-svn has liberated me from that madness. I can work for weeks on my private branches (while keeping up to date with the latest changes in svn) and routinely git svn dcommit large changesets. I actually use a remote git repository for storing and sharing my branches and commits (we have a nice github like facility where I work). Scares the hell out of my colleagues because they are not used to seeing that much change in a short time frame appearing in svn. But I do my due diligence of making sure all tests pass before I dcommit so no harm gets done. Basically by the time I dcommit to svn, my work is in a releasable state. Piling up large changesets and testing them in one go would be painful in svn, which is why people don’t tend to do feature branches in the svn world.

But Git is definitely hard to master and there is quite a high barrier to getting started. I struggled with it myself and I’ve seen other people struggle with it. It doesn’t help that many of its command names overlap with svn’s, even though the semantics of those commands are quite different in the git world (e.g. git revert).

However, the reason git is rapidly replacing subversion as the VCS of choice (for most OSS projects and many corporations) is that overall you are better off with git than with svn. It enables teams to change their workflows and not be blocked on a central resource (i.e. svn). Changing the workflow is essential: it is entirely possible to use git the way you would use svn, but you are not going to get much out of git that way.

Especially with larger teams, changing the workflow is a very big deal. I agree it may seem like overkill if you have been treating your VCS as a glorified file server that you use for backing up work in progress, which is pretty much the way most engineers tend to use it (sadly). But then, if you work that way, maybe you should consider using a version control system properly. If you use subversion properly, you’ll find that most of what you do is faster, less painful, and less error-prone in git. You’ll be doing stuff you wouldn’t even dream of doing in svn because it wouldn’t be worth the pain. Fear of branching and merging is widespread among subversion users, and for good reasons: subversion absolutely sucks at these activities and you can seriously mess up a code base with a botched svn merge, thus blocking your team. The hard part of using git is learning to merge and branch properly and then unlearning the idea that these activities are somehow dangerous, tedious and scary. They’re not. Everything is a branch in git. Your local repository is a branch. The remote git repository is a branch, and if you use git-svn, svn trunk is just another branch. Git is a merging and branching Swiss Army knife.

So, if you are stuck with subversion, do yourself a big favor and learn how to use git-svn. I’d say that, career-wise, picking up some git skills is essential at this point.

Yeah, I agree with basically all of that. SVN is easier to use, Git is more powerful. What motivated this post was the realisation that that trade-off is not inherent – it’s simply poor interface design on the part of Git. There’s no inherent reason why Git arbitrarily uses “repo branch”, “repo/branch” or “repo:branch” notation in different places.

In my sector, SVN is basically dead – no one (myself included) really uses it by choice anymore. Partly as a result of this post, my Git skills are actually fairly decent now – because I’ve spent the time to work out the different use cases, and get my head around Git’s torturous information model.

And yet I still constantly rub up against its irritations, and find fresh ones – like why on earth repositories don’t actually have names. The model of “I name your repository whatever I want” is much weaker than “your repository has a name which you gave to it”. Default names like “origin” work out horribly in practice (I soon forget from which repository I originally cloned), meaning you need to keep inventing procedures and workflows to avoid making mistakes – not the sign of a well-designed tool.
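
The three notations complained about in this reply can be seen side by side in a short script. This is a minimal sketch, assuming a local bare repository stands in for a real remote; the branch names dev and review are hypothetical. Strictly speaking, the colon form is a refspec (source:destination, here local branch to remote branch) rather than repo:branch, which arguably adds a fourth thing to keep in your head.

```shell
#!/bin/sh
# Sketch of the three notations, against a local bare repository standing in
# for a real remote. Branch names "dev" and "review" are hypothetical.
set -e
tmp=$(mktemp -d)
cd "$tmp"
git init -q --bare hub.git
git clone -q hub.git me
cd me
git config user.name "Me"
git config user.email "me@example.com"
git checkout -q -b dev
echo a > a.txt
git add a.txt
git commit -q -m "Add a.txt"

git push -q origin dev           # "repo branch": remote name, then branch name
git fetch -q origin
git log -1 --oneline origin/dev  # "repo/branch": the local remote-tracking ref
git push -q origin dev:review    # "src:dst" refspec: local dev -> remote branch review
```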

Distributed naming is very hard. What if you have two people named Steve who both decided to call their repo “Steve’s Repo”? The moment you have to start adding disambiguating information, you end up with something that looks like a URL, i.e. git://git.kernel.org/pub/scm/linux/kernel/git/tytso/ext4.git. So if you want a globally unique name, that’s it. If you want a short name, then it should be up to you to choose what you want as a short name.
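
Choosing your own short names is already possible with today's commands, which also softens the “I forget where origin points” problem. This is a minimal sketch, assuming the two hypothetical github.com URLs used later in this thread; nothing is fetched, and `git remote add origin` only imitates what `git clone` would have configured.

```shell
#!/bin/sh
# Sketch: choosing your own short names for remotes.
# The URLs are the hypothetical ones from this thread; nothing is fetched.
set -e
dir=$(mktemp -d)
cd "$dir"
git init -q

git remote add origin http://github.com/steve/my-repo   # imitates what "git clone" sets up
git remote rename origin steve                           # replace the generic default name
git remote add tytso http://github.com/tytso/my-repo

git remote -v       # lists every short name next to the URL it stands for
```

The short names stay purely local, so two people can pick different names for the same repository without any clash, which is the trade-off this comment describes.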

That’s true if you want zero chance of clashes. If you relax that constraint (with the fall back position of URLs) then you could improve usability. Imagine this:

$ git remote add http://github.com/steve/my-repo

Repo "my-repo" added.

$ git remote add http://github.com/tytso/my-repo